🍽️ NakMakanApa – A Generative AI Companion for Personalized Meal Discovery

🧠 Introduction

Every Malaysian has faced the timeless question: “Nak makan apa?” ("What do I want to eat?"). Whether you're a busy parent, a health-conscious professional, or someone simply staring at a fridge of random ingredients, deciding what to eat can be surprisingly stressful.

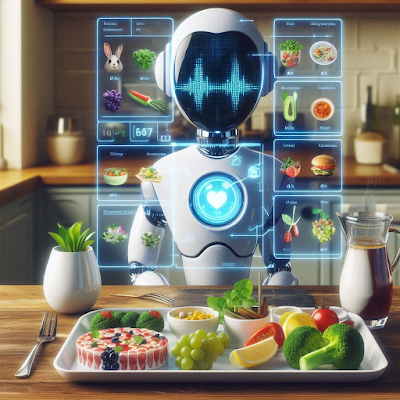

NakMakanApa is a Generative AI-powered food recommendation system that turns that daily dilemma into an intelligent, personalized experience. Built as part of the GenAI Intensive Course Capstone Project, this solution blends natural language understanding, image recognition, and AI-driven reasoning to guide users toward delicious, healthy, and culturally relevant meals.

🌟 What Makes NakMakanApa Special?

This isn’t just a recipe finder. NakMakanApa is a full-featured AI agent capable of:

- 🗣️ Understanding user prompts like “saya nak makanan pedas dan sihat untuk jantung” (I want something spicy and heart-healthy)
- 🖼️ Interpreting images of fridge contents to identify usable ingredients
- 🧠 Personalizing meal suggestions based on preferences, health goals, and local cuisine
- 📦 Fetching recipes via vector search and fallback to Gemini LLM if needed
- 🧾 Generating structured summaries and advice about the meal’s health benefits
- 📄 Exporting everything as a clean PDF or TXT document
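To make the personalization idea concrete, here is a minimal sketch of how detected ingredients and a user's stated preferences might be combined into a ranking. It is not the project's actual code: the `Recipe` class, the `ALIASES` table, and the scoring rule are all assumptions made for illustration, and the detected labels are passed in as plain strings rather than coming from a real detector.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Recipe:
    title: str
    ingredients: frozenset[str]
    tags: frozenset[str]


# Hypothetical Malay-to-English aliases so labels and prompts share one vocabulary.
ALIASES = {
    "ayam": "chicken",
    "telur": "egg",
    "bawang": "onion",
    "cili": "chili",
    "pedas": "spicy",
    "sihat": "healthy",
}


def normalize(words):
    """Lowercase each word and map known Malay terms to their English form."""
    return {ALIASES.get(w.lower(), w.lower()) for w in words}


def rank_recipes(recipes, detected, wanted_tags):
    """Rank recipes by (share of ingredients on hand) + (number of matching tags)."""
    have = normalize(detected)
    wants = normalize(wanted_tags)
    scored = []
    for recipe in recipes:
        coverage = len(recipe.ingredients & have) / len(recipe.ingredients)
        tag_hits = len(recipe.tags & wants)
        scored.append((coverage + tag_hits, recipe.title))
    # Highest score first; ties broken alphabetically; zero-score recipes dropped.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [title for score, title in ranked if score > 0]


recipes = [
    Recipe("Sambal Chicken", frozenset({"chicken", "chili", "onion"}), frozenset({"spicy"})),
    Recipe("Plain Omelette", frozenset({"egg", "onion"}), frozenset({"mild"})),
    Recipe("Fruit Salad", frozenset({"apple", "banana"}), frozenset({"sweet"})),
]
ranking = rank_recipes(recipes, detected=["Ayam", "cili", "telur"], wanted_tags=["pedas"])
```

In this toy setup a recipe rises in the ranking both when more of its ingredients are already in the fridge and when its tags match what the user asked for, so a mixed English and Malay input still resolves to the same vocabulary.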

🔧 How It Works – The Process

The project unfolds in 8 major steps:

- Prepare a structured recipe dataset as a DataFrame (`df_recipes`)
- Install and configure required libraries (LangChain, FAISS, Gemini SDK, YOLOv8, etc.)
- Allow image input – users can upload a fridge/ingredient photo
- Detect ingredients via YOLOv8 and match them with user preferences (multilingual supported!)
- Search local FAISS vector index for best recipe matches
- Fallback to Gemini if no good match is found, auto-updating the dataset & vector DB
- Summarize recipe & highlight health benefits, giving users a clear reason for the recommendation
- Generate PDF or TXT output, with beautiful formatting, for sharing or saving offline
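The search-then-fallback steps above can be sketched roughly as follows. This is only an illustration under stated assumptions: a toy bag-of-words vector and cosine similarity stand in for the semantic embeddings and FAISS index, the `generate` callable stands in for a Gemini call, and `RecipeStore`, `recommend`, and the `0.3` threshold are hypothetical names and values not taken from the project.

```python
import math
from collections import Counter


def embed(text):
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class RecipeStore:
    """In-memory stand-in for the recipe dataset plus its vector index."""

    def __init__(self, recipes):
        self.recipes = list(recipes)
        self.vectors = [embed(self._text(r)) for r in self.recipes]

    @staticmethod
    def _text(recipe):
        return recipe["title"] + " " + " ".join(recipe["ingredients"])

    def add(self, recipe):
        # Keep the dataset and the index in sync, as the fallback step describes.
        self.recipes.append(recipe)
        self.vectors.append(embed(self._text(recipe)))

    def search(self, query):
        """Return the best-matching recipe and its similarity score."""
        if not self.recipes:
            return None, 0.0
        q = embed(query)
        scores = [cosine(q, v) for v in self.vectors]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.recipes[best], scores[best]


def recommend(store, query, generate, threshold=0.3):
    """Try the local index first; call the generator only when the match is weak."""
    recipe, score = store.search(query)
    if recipe is not None and score >= threshold:
        return recipe, "index"
    recipe = generate(query)  # assumed to return a dict with title and ingredients
    store.add(recipe)
    return recipe, "generated"


def fake_generate(query):
    # Hypothetical generator used only so the sketch runs without an API key.
    return {"title": "Tom Yam Soup", "ingredients": ["prawn", "lemongrass", "chili"]}


store = RecipeStore([{"title": "Nasi Lemak", "ingredients": ["rice", "coconut", "anchovies"]}])
first, first_source = recommend(store, "rice coconut", fake_generate)
second, second_source = recommend(store, "prawn lemongrass soup", fake_generate)
third, third_source = recommend(store, "prawn lemongrass soup", fake_generate)
```

Because the generated recipe is added back to the store, the third call finds it locally instead of calling the generator again, which is the practical benefit of auto-updating the dataset and index.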

💡 GenAI Capabilities Applied

| Capability | Application |
|---|---|
| 📊 Structured Output / JSON Mode | Recipes returned in structured JSON: title, ingredients, steps, tags |
| 🧠 Image Understanding | YOLOv8 used to detect food from photos |
| 📚 Retrieval-Augmented Generation (RAG) | Blend of FAISS vector search with Gemini fallback |
| 🔍 Vector Store | Efficient similarity search on recipes using semantic embeddings |
| 🤖 Agents (Bonus) | The system behaves like an AI agent orchestrating input parsing, generation, and summarization seamlessly |
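As an illustration of the structured-output row, the sketch below shows one way a JSON recipe with the four fields named in the table (title, ingredients, steps, tags) could be checked before it is added to the dataset. The `RecipeCard` class and `parse_recipe` function are hypothetical names, and the sample string is made up rather than real model output.

```python
import json
from dataclasses import dataclass


@dataclass
class RecipeCard:
    title: str
    ingredients: list
    steps: list
    tags: list


def parse_recipe(raw):
    """Parse a JSON string into a RecipeCard, raising ValueError on a bad shape."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    title = data.get("title")
    if not isinstance(title, str) or not title.strip():
        raise ValueError("title must be a non-empty string")

    lists = {}
    for field in ("ingredients", "steps", "tags"):
        value = data.get(field)
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"{field} must be a list of strings")
        lists[field] = value

    return RecipeCard(title=title.strip(), **lists)


sample = (
    '{"title": "Sup Ayam", "ingredients": ["chicken", "ginger"], '
    '"steps": ["Boil the stock", "Simmer the chicken"], "tags": ["heart-healthy"]}'
)
card = parse_recipe(sample)
```

Validating the shape up front means a malformed response fails loudly at the boundary instead of producing a broken row in the recipe table or the exported document.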

🧪 Results & Achievements

✅ Users can receive personalized meal suggestions from both text and image input

✅ Recipes include clear, AI-generated summaries explaining their health value

✅ The system handles English and Malay prompts with ease

✅ Users can export results to PDF, making meal planning effortless

✅ Every process includes robust error handling for reliability

🚀 What’s Next?

This project is just the beginning. Future enhancements include:

- 📱 A mobile app version for everyday usage
- 🧮 Nutritional analysis (calories, macros, allergens)
- 🛒 Smart shopping list generation
- 🌏 Expansion to Middle Eastern and global cuisines
- 👩‍🍳 Community recipes and feedback-driven learning

👨‍🎓 Final Thoughts

NakMakanApa is more than a technical showcase—it’s a vision for how Generative AI can enrich daily life in culturally meaningful and health-conscious ways. By combining NLP, computer vision, RAG, and AI reasoning into a seamless user flow, we created an experience that feels human, helpful, and very Malaysian.

This project proves that AI can do more than automate tasks—it can guide, support, and inspire better living.
